My colleagues and I have been working through this intriguing paper [1] from a few weeks ago:

Yan, G., Vértes, P.E., Towlson, E.K., Chew, Y.L., Walker, D.S., Schafer, W.R., and Barabási, A.-L. (2017). Network control principles predict neuron function in the Caenorhabditis elegans connectome. Nature advance online publication.

This seems like a very important contribution. It promises detailed insights about the function of a neural circuit based on its connectome alone, without knowing any of the synaptic strengths. The predictions extend to the role that individual neurons play in the circuit’s operation. Seeing how a great deal of effort is now going into acquiring connectomes [2] (mostly lacking annotations of synaptic strengths), this approach could be very powerful.

The starting point is Barabási’s “structural controllability theory” [3], which makes statements about the control of linear networks. Roughly speaking a network is controllable if its output nodes can be driven into any desired state by manipulating the input nodes. Obviously controllability depends on the entire set of connections from inputs to outputs. Structural controllability theory derives some conclusions from knowing only which connections have non-zero weight. This seems like a match made in heaven for the structural connectomes of neural circuits derived from electron microscopic reconstructions. In these data sets one can tell which neurons are connected but not what the strength is of those connections, or even whether they are excitatory or inhibitory. Unfortunately the match is looking more like a forced marriage…
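To make the idea concrete, here is a minimal sketch of the standard Kalman rank test for a linear system dx/dt = Ax + Bu, applied to a made-up three-node chain rather than anything from the paper. The wiring `pattern`, the weight range, and the function name `controllability_rank` are all assumptions for illustration. The point is only that the rank comes out the same for every random choice of non-zero weights, which is the sense in which the conclusion is "structural".

```python
import numpy as np

def controllability_rank(A, B):
    """Rank of the Kalman matrix [B, AB, ..., A^(n-1)B] for dx/dt = Ax + Bu."""
    n = A.shape[0]
    blocks = [B]
    for _ in range(n - 1):
        blocks.append(A @ blocks[-1])
    return int(np.linalg.matrix_rank(np.hstack(blocks)))

# Hypothetical wiring: a chain 0 -> 1 -> 2, with the input attached to node 0.
# pattern[i, j] is True when node j connects to node i.
pattern = np.array([[0, 0, 0],
                    [1, 0, 0],
                    [0, 1, 0]], dtype=bool)
B = np.array([[1.0], [0.0], [0.0]])

rng = np.random.default_rng(0)
ranks = []
for _ in range(5):
    # Random magnitudes and random signs: only the wiring pattern is held fixed.
    magnitudes = rng.uniform(0.5, 2.0, size=pattern.shape)
    signs = rng.choice([-1.0, 1.0], size=pattern.shape)
    A = np.where(pattern, magnitudes * signs, 0.0)
    ranks.append(controllability_rank(A, B))

print(ranks)  # full rank (3) means every node can be steered from the single input
```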

1. Linearization around a fixed worm: An early step in the modeling of the worm is to linearize the dynamic equations around a fixed point (Eqn 2), where all the variables are stationary. Why a fixed point? All the measurements here are taken on swimming worms. In the phase space of muscle activations the worm executes a closed trajectory, rather than sitting at a fixed point. It seems more appropriate to analyze perturbations about this closed trajectory.

Is linearization even justified at all? The swimming worm operates in a fully nonlinear regime, with the motorneurons depolarized and hyperpolarized all the way. As the authors say, a linearly controllable system is also nonlinearly controllable. But the logic does not work in the other direction [4], so conclusions about non-controllability from a linear approximation cannot be carried over to the nonlinear system. This, however, is what the article does all the time.
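A textbook-style toy system, unrelated to the worm model, shows why the implication fails in the reverse direction. Take dx1/dt = x2³, dx2/dt = u. Linearized at the origin the cubic term vanishes, so the Kalman test declares x1 unreachable. Yet a simple push-then-brake input moves x1 in either direction while returning x2 to rest. The sketch below is an illustration under these assumptions (forward Euler integration, unit-amplitude inputs), not a proof of controllability.

```python
import numpy as np

def kalman_rank(A, B):
    """Rank of [B, AB, ..., A^(n-1)B]."""
    n = A.shape[0]
    blocks = [B]
    for _ in range(n - 1):
        blocks.append(A @ blocks[-1])
    return int(np.linalg.matrix_rank(np.hstack(blocks)))

# Linearization of dx1/dt = x2**3, dx2/dt = u around the origin:
# the derivative of x2**3 at x2 = 0 is zero, so A is the zero matrix.
A_lin = np.zeros((2, 2))
B_lin = np.array([[0.0], [1.0]])
lin_rank = kalman_rank(A_lin, B_lin)  # 1: the linear test says x1 cannot be steered

def simulate(u_sequence, dt=1e-3):
    """Forward-Euler integration of the nonlinear system from the origin."""
    x1, x2 = 0.0, 0.0
    for u in u_sequence:
        x1 += dt * x2 ** 3
        x2 += dt * u
    return x1, x2

steps = 1000
push_then_brake = np.concatenate([np.ones(steps), -np.ones(steps)])

x1_fwd, x2_fwd = simulate(push_then_brake)    # x1 ends positive, x2 back near 0
x1_bwd, x2_bwd = simulate(-push_then_brake)   # x1 ends negative, x2 back near 0

print(lin_rank, round(x1_fwd, 3), round(x1_bwd, 3))
```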

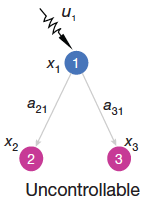

2. All neurons are perfect integrators: The next set of difficulties emerges in the article’s tutorial material. In Figure 2a we are shown one input neuron that drives two output neurons, and we are told that the output neurons are not controllable. This seems odd. Suppose, for example, that one of the output neurons responds better to low frequencies and the other to high frequencies. Then one can design an input signal that drives each output independently of the other.

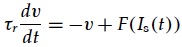

Digging into the figure caption we find that the derivatives of the 2 output neurons are strictly proportional to each other, which doesn’t seem right either. What this relation implies is that the neurons have no internal dynamics. The diagonal matrix elements a22 and a33 in Eqn (2) are assumed to be zero. In other words the neurons must be perfect integrators with infinite time constant. That doesn’t describe any real neural system. Approximately every model of a point neuron (i.e. with just one dynamical variable) starts with

$$\tau \frac{dx}{dt} = -x + I$$

where $\tau$ is the neuron’s integration time, $x$ is its single dynamical variable, and $I$ is the synaptic current produced by inputs from other neurons [5, Ch 7]. Typical integration times range from milliseconds to hundreds of milliseconds, but they are not infinite. In fact, an entire subfield of neuroscience is contemplating how brain circuits can sustain stable activity patterns with neurons that only have short-term dynamics.

Digging into the paper’s supplement we find that the theory could be rescued if the integration time $\tau$ is finite but identical for every neuron in the circuit. Again, this is a patently unrealistic assumption: neural networks have diverse components with many time constants even within the same circuit [6], and circuits for pattern generation in particular benefit from elements with different dynamics. For example, a computational model for the C. elegans swimming circuit [7] uses point neurons with time constants that range over a factor of 3.5. So the condition imposed here that all the time constants be identical just doesn’t apply to any real neural systems, or as the authors might say (see Suppl II.A) only to “some pathological cases for which the algebraic variety in parameter space has Lebesgue measure zero”.
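The three cases can be checked numerically on a network wired like the Figure 2a motif: one input neuron driving two output neurons. The sketch below is my own reconstruction under stated assumptions, not the authors' code. The weights `a21` and `a31` and the time constants are arbitrary illustrative values, and each diagonal element is written as -1/tau, so an infinite time constant reproduces the perfect integrator.

```python
import numpy as np

def kalman_rank(A, B):
    """Rank of [B, AB, ..., A^(n-1)B]."""
    n = A.shape[0]
    blocks = [B]
    for _ in range(n - 1):
        blocks.append(A @ blocks[-1])
    return int(np.linalg.matrix_rank(np.hstack(blocks)))

def fig2a_like_system(tau2, tau3, a21=1.0, a31=2.0):
    """Node 1 receives the input; nodes 2 and 3 are the outputs it drives.

    tau = np.inf gives a perfect integrator (zero diagonal element).
    The weights a21, a31 are arbitrary non-zero values.
    """
    A = np.array([[0.0, 0.0, 0.0],
                  [a21, -1.0 / tau2, 0.0],
                  [a31, 0.0, -1.0 / tau3]])
    B = np.array([[1.0], [0.0], [0.0]])
    return A, B

rank_integrators = kalman_rank(*fig2a_like_system(np.inf, np.inf))
rank_equal_tau = kalman_rank(*fig2a_like_system(0.1, 0.1))
rank_distinct_tau = kalman_rank(*fig2a_like_system(0.1, 0.35))

print(rank_integrators, rank_equal_tau, rank_distinct_tau)  # 2 2 3
```

With perfect integrators or identical time constants the rank is 2, so the two outputs cannot be steered independently. As soon as the two time constants differ, the rank is 3 and the single input controls both.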

Note that this problem with “structural controllability theory”, namely the insistence on nodes without internal dynamics, was spotted shortly after Barabási’s 2011 paper [3], and the resulting claims have already been refuted in the literature [8]. It was pointed out that in a system where the nodes are allowed to have dynamics a single time-dependent input is sufficient to make the network “structurally controllable”. Why does that same theory get dished up again, with all the same deficiencies?

3. Experimental measurements incommensurate with theory: Suppose we overlooked this fatal flaw and got to the point of considering experimental data against the theory’s predictions. The theory’s output is categorical: either a set of nodes is controllable or it is not. That is because the theory’s inputs are categorical: either two nodes are connected or not. Because no quantitative information is available about the strength of synapses in the network, we can expect no quantitative predictions about the network’s dynamics. So we must look for experimental proof that the worm has gone from controllable under one condition to uncontrollable under another. Nothing of the sort happens in the reported experiments.

The worms swim just fine both before and after the network perturbations that are supposed to cause uncontrollability. There are small changes in the shape of the swimming worm (Figs 2f and 3b-c, example above): All the systematic effects of neuron ablation are smaller than the natural variation within a set of normal worms. So if the normal worms (red dots) are considered controllable, the perturbed worms (green dots) are controllable too. There is no logical connection between a small quantitative change in the worm’s swimming movements and the all-or-nothing predictions from structural controllability theory.

One might ask whether measurements of the type reported here can serve to test controllability even in principle. In the intact worm 89 muscles are independently controllable, so their activations could fill an 89-dimensional volume. But the measurements cover only a 4-dimensional subspace spanned by the “eigenworms”. How can one assess controllability of the system by observing only a tiny fraction of its degrees of freedom? Suppose a perturbation of the network leads to loss of one of those 89 dimensions. For that effect to be detected, the lost dimension must reside inside the 4-dimensional subspace of the measurements. Again that happens only in “some pathological cases for which the algebraic variety in parameter space has Lebesgue measure zero”.
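A quick numerical sketch of this argument, using random stand-ins rather than real data: the 4 measurement directions and the lost direction are both drawn at random, and the 89-dimensional space is treated as a plain vector space. These are assumptions for illustration only. The reachable set, as seen through the 4 measured directions, keeps its full rank of 4 after one dimension is removed.

```python
import numpy as np

rng = np.random.default_rng(1)
n_muscles, n_observed = 89, 4

# Hypothetical measurement: 4 orthonormal directions in muscle space,
# standing in for the eigenworm projections. Shape (4, 89).
Q, _ = np.linalg.qr(rng.standard_normal((n_muscles, n_observed)))
P = Q.T

# Intact network: the reachable set spans all 89 dimensions.
reachable_intact = np.eye(n_muscles)

# Perturbed network: one randomly oriented direction is lost.
lost = rng.standard_normal(n_muscles)
lost /= np.linalg.norm(lost)
# QR with `lost` as the first column: the remaining 88 columns form an
# orthonormal basis of everything orthogonal to the lost direction.
basis, _ = np.linalg.qr(
    np.column_stack([lost, rng.standard_normal((n_muscles, n_muscles - 1))])
)
reachable_perturbed = basis[:, 1:]  # shape (89, 88)

rank_intact = int(np.linalg.matrix_rank(P @ reachable_intact))
rank_perturbed = int(np.linalg.matrix_rank(P @ reachable_perturbed))

# Fraction of the lost direction that falls inside the measured subspace.
visible_fraction = float(np.linalg.norm(P @ lost) ** 2)

print(rank_intact, rank_perturbed, round(visible_fraction, 3))
```

The observed rank drops only if the lost direction lies entirely within the measured subspace, which a random draw will essentially never produce.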

Summary: It seems that “structural controllability theory” doesn’t apply to real neural systems, and that the experimental measurements reported here have no logical relation to the predictions of the theory. So this paper leaves plenty of room for discoveries, and the problem of linking connectomes to functional predictions remains an open challenge.

[1] Yan, G., Vértes, P.E., Towlson, E.K., Chew, Y.L., Walker, D.S., Schafer, W.R., and Barabási, A.-L. (2017). Network control principles predict neuron function in the Caenorhabditis elegans connectome. Nature advance online publication.

[2] Swanson, L.W., and Lichtman, J.W. (2016). From Cajal to Connectome and Beyond. Annual Review of Neuroscience 39, 197–216.

[3] Liu, Y.-Y., Slotine, J.-J., and Barabási, A.-L. (2011). Controllability of complex networks. Nature 473, 167.

[4] Coron, J.-M. (2007). Control and Nonlinearity (American Mathematical Society).

[5] Dayan, P., and Abbott, L.F. (2001). Theoretical neuroscience: computational and mathematical modeling of neural systems (Cambridge, Mass.: MIT Press).

[6] Neuroelectro.org

[7] Karbowski, J., Schindelman, G., Cronin, C.J., Seah, A., and Sternberg, P.W. (2008). Systems level circuit model of C. elegans undulatory locomotion: mathematical modeling and molecular genetics. J Comput Neurosci 24, 253–276.

[8] Cowan, N.J., Chastain, E.J., Vilhena, D.A., Freudenberg, J.S., and Bergstrom, C.T. (2012). Nodal Dynamics, Not Degree Distributions, Determine the Structural Controllability of Complex Networks. PLOS ONE 7, e38398.

I really would love the authors to comment on this. We looked at the paper a bit and feel very similar about its contributions. But what do you make of the fact that they correctly predicted a new neuron having a role for movement? Would we know what the baseline probability is (i.e. of the neurons that exist, which proportion would have such an effect)?

Also, I would be curious if they pre-registered their study. Did they previously state that they will try to ablate that one neuron (PDB) and no others? I am a bit concerned about the non-availability of their code (what does reasonable request mean?). In 2017 all code belongs on github. If it were, I could personally look for signs of p-hacking.

Towlson and colleagues have now posted some explanations and code for simulations here: https://arxiv.org/abs/1805.11081.

There seems to be precedent for research on networks which doesn’t really add up, from one of the authors:

https://liorpachter.wordpress.com/2014/02/10/the-network-nonsense-of-albert-laszlo-barabasi/

I doubt that there was any p-hacking going on, but in a real way the prediction of PDB’s involvement is relatively trivial. “We predict that control of the muscles requires 12 neuronal classes… including PDB, for which there was not previously available ablation experiment data.”

PDB is a motorneuron. I think we would reasonably predict that it has some unspecified impact on the behavior of some muscle cell just by staring at the wiring. We might even make the less trivial prediction using, as described in the paper, “simple connectivity-based predictions” and some trivial anatomy that it might impact behavior specific to dorsal/ventral body posture in the posterior part of the body, given that it preferentially connects to dorsal and posterior body wall muscle cells. In fact, such a prediction WAS made in Jarrell et al., 2012, at least for the male worm (see also http://www.wormatlas.org/neurons/Individual%20Neurons/PDBframeset.html). This is a more specific and more testable prediction than provided by the control theory analysis.

More interesting are maybe the predictions as stated that DD04, DD05 and DD06 should impact behavior if ablated singly, whereas DD02 and DD03 should not.

The problem is that this is not exactly what the control theory predicts. I think it more specifically predicts that, if individually ablated, some neurons should reduce the number of muscle cells individually controllable in response to signal modulation specifically from PLM cells… The test itself is weak in that we don’t observe whether any muscle cells are individually controlled, and we aren’t actually doing much, if anything, to modulate the inputs from PLM. You can certainly get posture changes if muscle cells are controlled in groups (left-right pairs, for instance, or larger groups). From my shallow understanding, we would have to more broadly explore both the space of inputs from PLM and the behavioral state space to test the real predictions (e.g., what about when the animals are starved or satiated, in the presence of predators, in a patch of poisonous bacteria, etc.). It is really hard to prove that they can’t “control” the muscles under some set of circumstances.

Another thing I found strange is the very coarse scale nature of the prediction (ablating these neurons singly should impact some unspecified aspect of the “controllability” of unspecified individual muscle cells) vs. the extremely fine scale nature of the test results (“Negative forward eigenprojection 4” is higher than mock ablated).

Does all this mean that this incarnation of control theory (including questions about the validity of certain assumptions in the math) or something similar is useless or invalid? If you look at it as a pattern detection algorithm for networks and ignore the loaded language of “control” that is easily misinterpreted, it could be salvaged. Certainly, there are other approaches applied widely in biology where the underlying assumptions are known to be laughably wrong (almost anything applied to gene sequence data, point neuron models, etc. etc. etc.) but they still pick up useful patterns in complex data, and generate solid hypotheses that help us to better understand the world.

However, thinking of it as a way of highlighting otherwise difficult to see patterns, it still falls a little flat in this case. It doesn’t pick up anything in the parts of the network that we think of as “interesting”, the parts that are integrating multiple sensory modalities for instance, and seems to almost exclusively pick out things directly connected to muscle cells. If you are interested in complex circuit function, it seems biased against seeing almost anything where the “good stuff” happens. Hypotheses generated by “simple connectivity based predictions” are more specific and more testable, two good criteria for a good hypothesis.

It is a tough business trying to find useful patterns in network data when you are more interested in function than in statistical graph properties. Maybe before dismissing it entirely, someone should try and see if, as a framework, it could be made to work better. It DOES feel like it wants to help answer questions we are interested in and that other flavors of graph theory fail to do, e.g. “Which neurons might be important for circuits between these specific sensory inputs and these specific network outputs.”

Sorry for the book length response, and thanks for the great post.

just for completeness, it bothers me that people call it “barabasi’s structural controllability.” structural controllability is a very useful and elegant finding for many [linear] systems, and credit should be given to its original discoverer, Ching-Tai Lin. he published the result in 1974! 🙂 http://ieeexplore.ieee.org/document/1100557/

Duly noted. Many thanks for the pointer.

Great commentary! Very useful caveats to the use of linear systems theory to (any) real system.

I might also note that while the output of a linear system is considered controllable iff the output controllability matrix has full rank, the comment under section 3 “The theory’s output is categorical: either a set of nodes is controllable or it is not,” is technically untrue.

The theory’s output is an upper and lower bound on the rank of the output controllability matrix, which is a number between 0 and the number of outputs (i.e. number of muscles). This rank is the dimension of the output space (i.e. 95 dimensional muscle-space) that can be traversed with linearly independent inputs, and is geometrically intuitive. The use of structural control theory is to demonstrate this quantitative non-binary number is robust to network geometry.

Thus, given a linear system where we measure all of the muscles independently, a decrease in this rank (which is the control metric computed by the authors) corresponds to a decrease in the accessible output space. I agree that only observing a 4 dimensional subspace is far from ideal.
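As a rough illustration of that rank being a number rather than a yes/no answer, here is a small sketch on an invented five-node network (one input neuron, one interneuron, three muscles). The wiring and weights are hypothetical and not taken from the paper. The output controllability matrix is C[B, AB, ..., A^(n-1)B], where C selects the muscle nodes.

```python
import numpy as np

def output_controllability_rank(A, B, C):
    """Rank of C [B, AB, ..., A^(n-1)B]: dimension of the reachable output space."""
    n = A.shape[0]
    blocks = [B]
    for _ in range(n - 1):
        blocks.append(A @ blocks[-1])
    return int(np.linalg.matrix_rank(C @ np.hstack(blocks)))

# Hypothetical wiring. Nodes: 0 = input neuron, 1 = interneuron, 2-4 = muscles.
# edges are (target, source) pairs; nodes have no self-dynamics here.
edges = [(1, 0), (2, 0), (3, 1), (4, 0), (4, 1)]

rng = np.random.default_rng(2)
A = np.zeros((5, 5))
for target, source in edges:
    A[target, source] = rng.uniform(0.5, 2.0)  # arbitrary non-zero weight

B = np.zeros((5, 1))
B[0, 0] = 1.0              # the external signal enters at node 0
C = np.eye(5)[2:, :]       # observe only the three muscles

rank = output_controllability_rank(A, B, C)
print(rank, "of", C.shape[0], "muscle dimensions reachable")
```

In this toy example the single input reaches a 2-dimensional subspace of the 3-dimensional muscle space, so the answer is neither "controllable" nor "nothing is controllable".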
